Deepseek MoE: A New Generation of Fast and Intelligent Models

Artificial intelligence (AI) is evolving quickly, and Mixture of Experts (MoE) models have opened a new direction: building very large models without a matching increase in computation. Deepseek MoE is an AI model that applies this idea, aiming to combine high speed, efficiency, and strong performance. Instead of running every parameter for every input, it sends each token through a small, learned subset of expert networks.

Deepseek MoE was developed by Deepseek, a company building large language models and other machine learning technology. In this article, we discuss how Deepseek MoE works, its benefits, and its potential impact on AI.

What is Deepseek MoE?

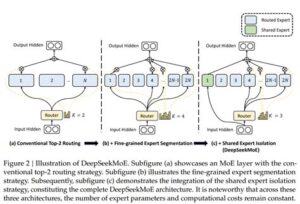

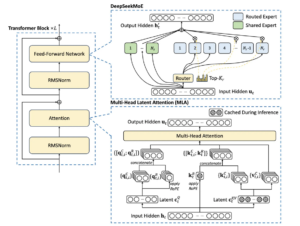

Deepseek MoE is an artificial intelligence model based on the Mixture of Experts (MoE) architecture, which consists of many distinct sub-networks called experts. During training, each expert tends to specialize in certain patterns in the data, and a learned gate network (often called a router) decides which experts to activate for each input token.

Because only a few experts run for each token, the model uses far less computation per token than a dense model with the same total number of parameters, which lowers cost and speeds up processing.

What is Mixture of Experts (MoE) technology?

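MoE is a neural network architecture in which some layers (in transformer models, usually the feed-forward layers) are replaced by multiple "expert networks". When an input is fed into the model, a gate network scores the experts and activates only the top few for each token; their outputs are then combined using the gate's scores.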

This approach makes AI models:

- More scalable: the total number of parameters can grow by adding experts while the computation per token stays roughly the same.

- More efficient: only the relevant experts are activated, avoiding unnecessary processing.

- Faster: each token passes through only a fraction of the model's parameters.
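A minimal sketch of one MoE layer in plain Python, assuming softmax gating over dot-product scores followed by top-k selection. This is a common MoE formulation, not necessarily Deepseek's exact one; the toy experts, the gate vectors, and the function name `moe_layer` are hypothetical. Some MoE variants also renormalize the selected gate weights so they sum to 1; this sketch does not.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(x, experts, gate_vectors, k=2):
    """Route one input vector x to its top-k experts and mix their outputs."""
    # Gate: one affinity score per expert (dot product with a learned vector).
    scores = [sum(a * b for a, b in zip(x, g)) for g in gate_vectors]
    probs = softmax(scores)
    # Keep only the k experts with the highest gate probability.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    # Only the selected experts are evaluated; the rest are skipped entirely.
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)
        out = [o + probs[i] * yi for o, yi in zip(out, y)]
    return out, top

# Hypothetical toy experts: each just scales its input by a different factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_vectors = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, chosen = moe_layer([2.0, 1.0], experts, gate_vectors, k=2)
print(chosen)  # [0, 1]
```

Here the input [2.0, 1.0] scores highest against experts 0 and 1, so only those two are evaluated; experts 2 and 3 never run for this input.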

Advantages of Deepseek MoE

1. Fast processing

Deepseek MoE activates only a few experts for each token, so its speed is closer to that of a much smaller dense model than to that of a dense model with the same total number of parameters.

2. Efficient resource utilization

In traditional dense models, every parameter is used for every token, which consumes more computational resources. Deepseek MoE activates only specific experts, so each token requires less computation and energy.
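For a sense of scale, Deepseek-V3, which is built on the Deepseek MoE architecture, is publicly reported to have 671B total parameters with 37B activated per token. Using the rough rule of thumb of about 2 FLOPs per active parameter per token, and ignoring attention and other overheads, the comparison with a hypothetical dense model of the same total size works out as:

```latex
\begin{aligned}
\frac{N_{\text{active}}}{N_{\text{total}}} &= \frac{37 \times 10^{9}}{671 \times 10^{9}} \approx 0.055, \\
\text{FLOPs}_{\text{MoE}} &\approx 2 \times 37 \times 10^{9} = 7.4 \times 10^{10} \ \text{per token}, \\
\text{FLOPs}_{\text{dense}} &\approx 2 \times 671 \times 10^{9} \approx 1.34 \times 10^{12} \ \text{per token}.
\end{aligned}
```

So only about 5.5% of the parameters take part in each token's computation.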

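3. Better use of parameters through expert specialization

During training, each expert is pushed toward learning distinct knowledge, which lets the model use its parameters more effectively. Once trained, the model does not keep learning on its own; improvements come from further training.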

4. Strong performance on language tasks and large data sets

Deepseek MoE is designed to be trained on very large data sets and targets natural language processing (NLP) tasks such as text generation, machine translation, and other complex language tasks.

5. Wide range of industry applications

This model can be used in various industries such as education, research, data analysis, software development, and automated decision-making.

How does Deepseek MoE work?

At a high level, Deepseek MoE works in the following steps:

- Input Analysis: User input (text or a query) is split into tokens and fed into the model.

- Expert Selection: At each MoE layer, the gate network scores every expert for each token and selects the few best suited to it.

- Processing: The selected experts process the token, and their outputs are combined using the gate's scores.

- Final Output: After the token representations pass through all layers, the model produces the answer or solution for the user.

Because only the selected experts run, the model can contain a very large number of parameters while keeping the computation for each token low.
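The four steps can be illustrated with a toy, single-layer sketch that routes each token of a sentence separately. Everything here is hypothetical: the whitespace tokenizer, the two-dimensional vectors in `EMBED`, the gate vectors in `GATE`, and the toy experts stand in for learned components, and only the single top expert (k=1) is used so the routing is easy to read. A real model repeats the routing at every MoE layer.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy embeddings and gate vectors; real models learn these.
EMBED = {"solve": [1.0, 0.2], "x": [0.9, 0.1], "translate": [0.1, 1.0], "hello": [0.2, 0.8]}
GATE = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
EXPERTS = [
    lambda v: [2 * a for a in v],   # toy expert 0
    lambda v: [a + 1 for a in v],   # toy expert 1
    lambda v: [-a for a in v],      # toy expert 2
]

def run(text, k=1):
    routes, outputs = [], []
    for token in text.split():                 # 1. Input analysis: split into tokens
        h = EMBED[token]
        probs = softmax([sum(a * b for a, b in zip(h, g)) for g in GATE])
        # 2. Expert selection: keep the k highest-probability experts for this token.
        top = sorted(range(len(GATE)), key=lambda i: probs[i], reverse=True)[:k]
        y = [0.0] * len(h)
        for i in top:                          # 3. Processing: only the selected experts run
            y = [acc + probs[i] * v for acc, v in zip(y, EXPERTS[i](h))]
        routes.append((token, top))
        outputs.append(y)
    return routes, outputs                     # 4. Output (a real model has many more layers)

routes, outputs = run("solve x translate hello")
print(routes)  # [('solve', [0]), ('x', [0]), ('translate', [1]), ('hello', [1])]
```

Different tokens in the same input end up at different experts, which is why routing happens per token rather than once per request.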

Potential Uses of Deepseek MoE

Education:

Supports AI-assisted learning, automated annotation, and personalized teaching.

Research:

Useful in analyzing large amounts of data and solving complex research problems.

Business:

Suited to customer support, data analytics, and automated decision-making.

Cybersecurity:

Supports threat analysis and AI-based security measures.

Software Development:

Useful in AI-based code generation and automation.

Basic technical description of Deepseek MoE

Deepseek MoE is a Mixture of Experts (MoE) model built on the transformer neural network architecture. In its MoE layers, the usual feed-forward network is replaced by many "experts" (expert modules), together with a gate network that decides which experts to activate for each token.

This design is more efficient than a traditional dense model of the same total size, because only the selected experts run for each token while the others stay idle, saving energy and computational resources.

In-depth analysis of MoE technology

1. Formation of expert modules

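Deepseek MoE has many expert modules, but they are not assigned to tasks by hand. All experts are trained together, and each one gradually specializes in the kinds of tokens the gate network sends to it. Deepseek MoE is designed to strengthen this specialization in two ways:

- Fine-grained experts: instead of a few large experts, the model uses many smaller ones, so the gate can combine them more flexibly.

- Shared experts: a few experts are always active for every token and capture common knowledge, so the routed experts do not each have to relearn it.

When a token comes in, the gate network selects the most relevant routed experts, which keeps computation low while preserving accuracy.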
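The shared-plus-routed layout can be sketched as follows. This is a minimal illustration in the spirit of the Deepseek MoE design, not Deepseek's implementation: the toy experts (simple scaling functions), the gate vectors, and the function name `moe_with_shared_experts` are all hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_with_shared_experts(x, shared, routed, gate_vectors, k):
    """Hypothetical MoE layer: always-on shared experts plus top-k routed experts."""
    out = list(x)  # residual connection: start from the input itself
    # Shared experts process every token and are not gated.
    for expert in shared:
        out = [o + y for o, y in zip(out, expert(x))]
    # Routed experts: score all, keep the top-k, weight each output by its gate probability.
    probs = softmax([sum(a * b for a, b in zip(x, g)) for g in gate_vectors])
    top = sorted(range(len(routed)), key=lambda i: probs[i], reverse=True)[:k]
    for i in top:
        out = [o + probs[i] * y for o, y in zip(out, routed[i](x))]
    return out, top

# Toy experts that just rescale their input (hypothetical stand-ins for feed-forward networks).
shared = [lambda v: [0.5 * a for a in v]]
routed = [lambda v, s=s: [s * a for a in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_vectors = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out, top = moe_with_shared_experts([1.0, 0.5], shared, routed, gate_vectors, k=2)
print(top)  # [0, 1]
```

The shared expert contributes to every token regardless of the gate, while only two of the four routed experts are evaluated.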

2. Importance of the Gate Network

The gate network is a central component of MoE because it decides which experts to activate. It is learnable: its parameters are trained together with the experts, so its routing decisions improve during training.

For each token, it computes an affinity score between the token's hidden representation and each expert, keeps the highest-scoring experts, and uses their scores to weight their outputs.
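One common way to write this gating, following the formulation in the Deepseek MoE paper but simplified here by leaving out shared experts, is shown below. Here u_t is the hidden state of token t, e_i is a learned vector for expert i, N is the number of experts, and K is how many are selected; details such as how the scores are normalized differ between Deepseek model versions.

```latex
\begin{aligned}
s_{i,t} &= \operatorname{Softmax}_i\!\left(\mathbf{u}_t^{\top}\mathbf{e}_i\right), \\
g_{i,t} &= \begin{cases}
  s_{i,t}, & s_{i,t} \in \operatorname{TopK}\left(\{\, s_{j,t} \mid 1 \le j \le N \,\}, K\right), \\
  0, & \text{otherwise},
\end{cases} \\
\mathbf{h}_t &= \sum_{i=1}^{N} g_{i,t}\,\operatorname{FFN}_i(\mathbf{u}_t) + \mathbf{u}_t .
\end{aligned}
```

Experts with g_{i,t} = 0 contribute nothing, so their feed-forward networks do not need to be computed for token t.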

3. Sparse Computation

In traditional dense models, all parameters are used for every input, which consumes more computing power and energy. Deepseek MoE uses sparse computation, meaning only a few specific experts are activated for each token, which:

- Consumes less energy.

- Enables faster processing.

- Achieves better scalability.
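Sparse computation can be made visible by counting expert calls. The sketch below is a hypothetical illustration with random gate vectors and toy experts; the class `CountingExpert` and function `route_batch` are made up for this example. It ranks experts by raw gate scores (equivalent to ranking by softmax probabilities, since softmax preserves order) and only counts calls, without combining outputs.

```python
import random

class CountingExpert:
    """Toy expert that scales its input and counts how many tokens it processed."""

    def __init__(self, scale):
        self.scale = scale
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return [self.scale * v for v in x]

def route_batch(tokens, experts, gate_vectors, k):
    """Send each token to its top-k experts; outputs are discarded, we only count calls."""
    for x in tokens:
        # Ranking raw scores gives the same top-k as ranking softmax probabilities.
        scores = [sum(a * b for a, b in zip(x, g)) for g in gate_vectors]
        top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
        for i in top:  # experts outside the top-k are never called
            experts[i](x)

random.seed(0)
num_experts, k, num_tokens, dim = 8, 2, 100, 4  # hypothetical sizes
experts = [CountingExpert(scale) for scale in range(1, num_experts + 1)]
gate_vectors = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
tokens = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_tokens)]

route_batch(tokens, experts, gate_vectors, k)
total_calls = sum(e.calls for e in experts)
print(total_calls, "expert calls vs", num_experts * num_tokens, "if every expert ran")
```

With 8 experts, top-2 routing, and 100 tokens, exactly 200 expert evaluations happen, a quarter of the 800 that running every expert would need, no matter which experts the random gate happens to pick.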

Key Benefits of Deepseek MoE

1. Excellent Scalability

Because adding experts increases the model's total capacity without a proportional increase in computation per token, the MoE design scales to very large models, making it suitable for demanding applications such as Big Data Analytics.
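The scalability argument can be checked with simple parameter counting. This sketch assumes each expert is a two-matrix feed-forward network and uses hypothetical sizes (d_model = 1024, d_hidden = 2048, top-2 routing); the helper names are made up for illustration.

```python
def expert_params(d_model, d_hidden):
    # One feed-forward expert: d_model x d_hidden and d_hidden x d_model weights (biases ignored).
    return 2 * d_model * d_hidden

def layer_param_counts(num_experts, top_k=2, d_model=1024, d_hidden=2048):
    """Return (total, active-per-token) expert parameters for one hypothetical MoE layer.

    Gate parameters (num_experts * d_model) are ignored because they are tiny by comparison.
    """
    per_expert = expert_params(d_model, d_hidden)
    return num_experts * per_expert, top_k * per_expert

for n in (8, 16, 32, 64):
    total, active = layer_param_counts(n)
    print(f"{n:>3} experts: {total / 1e6:6.1f}M total, {active / 1e6:4.1f}M active per token")
```

Going from 8 to 64 experts grows the layer from about 33.6M to about 268.4M parameters, while the parameters used per token stay at about 8.4M.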

2. High performance at low cost

Because each token uses only a fraction of the parameters, Deepseek MoE can approach the performance of larger dense models at a lower computational cost, making it attractive for enterprises and research institutions.

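3. Efficient training and improvement

Like other large language models, Deepseek MoE does not learn from real-time data on its own once deployed. It improves through further training and fine-tuning, and its sparse design makes each training step cheaper than for a dense model of the same total size.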

4. Modular Design

Deepseek MoE is built from many expert modules behind a learned gate, so designers can adjust how many experts a model has and how many are activated per token when building new versions, making the architecture flexible and upgradable.

Conclusion

Deepseek MoE shows how a Mixture of Experts design can combine speed, strong performance, and efficient use of computational resources. MoE technology is an important direction in AI, allowing larger and more capable models to be built without a matching increase in computation.

If you are interested in advanced AI, data science, or machine learning, Deepseek MoE is a model well worth studying.

FAQs

1. What is Deepseek MoE?

Deepseek MoE is a Mixture of Experts (MoE)-based artificial intelligence (AI) model that activates only a small subset of its experts for each token, which makes it fast and efficient.

2. How does the MoE model work?

The MoE model consists of multiple expert modules (experts), and a gate network decides which of them to activate for each token, which improves speed and efficiency.

3. How is Deepseek MoE better than other AI models?

This model runs only a few experts for each token, which uses fewer computing resources, while dense AI models run every parameter for every token, which takes more time and energy.

4. In what areas can Deepseek MoE be used?

It can be used in areas such as natural language processing (NLP), data analysis, financial modeling, machine vision, education, and software development.

5. What is the role of the Gate Network?

The gate network is a learned component of the model that scores each input token against every expert and decides which experts to activate, so that only the most relevant ones run.

6. How fast does Deepseek work?

Actual speed depends on the hardware and model size, but the computation per token is much lower than that of a dense model with the same total number of parameters, because only the necessary experts are activated.

7. Is Deepseek MoE capable of self-learning?

Not in the sense of learning on its own after deployment. Like other machine learning models, it learns during training, and its accuracy can improve when it is retrained or fine-tuned on new data.

8. On which platforms can Deepseek be used?

This model can be accessed through cloud services or deployed in data centers; running it on local devices depends on the model's size and the available hardware.

9. Is Deepseek energy efficient?

Yes, it uses sparse computation, activating only the necessary experts for each token, which consumes less energy per token than a comparable dense model.

10. Is Deepseek available to consumers?

Deepseek MoE models have been released with open weights for researchers and developers, and Deepseek also offers consumer-facing chat products built on its later MoE-based models.
