When Was DeepSeek R1 Released?

Introduction

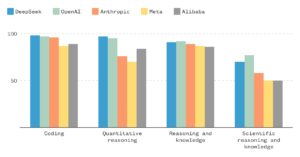

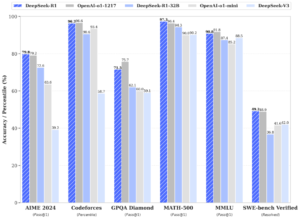

If you are interested in artificial intelligence (AI), you have probably heard of DeepSeek R1. It is an advanced open-source AI model that performs particularly well in areas such as mathematics, coding, and natural language processing (NLP).

In this article, we will discuss the release of DeepSeek R1, its features, technical specifications, comparison with other models, and future expectations in detail. So, let’s talk about this advanced AI model!

- What is DeepSeek R1?

- Its importance and comparison with other AI models

Salient Features of DeepSeek R1

DeepSeek R1 is not an ordinary AI model: it combines several advanced techniques that set it apart from other models.

Advanced Mixture of Experts (MoE) Technology

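MoE is an architecture that splits the model into many specialized sub-networks ("experts") and activates only a few of them for each input, so it delivers strong results with less computation per token.

- The computational cost is lower than that of a dense model of the same total size.

- Specific “expert” neural networks are activated for each input token, which lets the model specialize and give more accurate answers.

- Can make more efficient use of accelerators such as GPUs and TPUs, since only part of the model runs for each token.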
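
To make the routing idea above more concrete, here is a minimal sketch of top-k expert selection in plain Python. It is a toy illustration, not DeepSeek's actual implementation: the experts are hypothetical one-line functions, and the gate scores are given by hand, whereas in a real model they would be produced by a learned router from each token.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy experts (assumptions for illustration only).
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
gate_scores = [0.1, 2.0, -1.0, 2.0]  # hand-picked; normally computed per token

selected = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
print(selected, output)
```

Only two of the four experts run for this input, which is where the compute savings come from: the other experts' parameters exist but are not evaluated.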

Transformer Architecture

DeepSeek R1 is built on the Transformer architecture, which relies on the self-attention mechanism, like the GPT family and other advanced AI models.

- Uses multi-head attention, which captures several kinds of relationships between words at the same time.

- Processes the tokens of a sequence in parallel rather than one by one, which speeds up processing.
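
As a rough illustration of self-attention, the sketch below computes scaled dot-product attention for a single head in plain Python. It assumes, for simplicity, that the queries, keys, and values are the token vectors themselves; a real Transformer first passes them through learned projection matrices and runs several such heads side by side.

```python
import math

def softmax(row):
    """Normalize a list of scores into weights that sum to 1."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query with every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy 2-dimensional token vectors (made up for this example).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one token attends to every other token. Because each row is computed independently, all rows can be evaluated in parallel on real hardware.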

Better results through Reinforcement Learning (RLHF)

DeepSeek R1 is trained with large-scale reinforcement learning. Techniques in this family, such as Reinforcement Learning from Human Feedback (RLHF), let the model improve based on feedback about the quality of its answers.

- More realistic and natural responses.

- Greater accuracy in math and coding.

- Safer and more effective AI behavior.
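
To show how human feedback can become a training signal in RLHF generally, here is a minimal sketch of the pairwise preference loss commonly used to train a reward model. This is a generic textbook formulation, not DeepSeek's published training recipe, and the reward values below are made-up numbers.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Bradley-Terry style loss: -log(sigmoid(r_chosen - r_rejected)).

    The loss is small when the answer humans preferred already gets the
    higher reward, and large when the ranking is the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    return math.log1p(math.exp(-margin))

# Hypothetical reward scores for two answers to the same prompt.
good_ranking = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
bad_ranking = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
print(good_ranking, bad_ranking)
```

Minimizing this loss over many human comparisons teaches the reward model to score preferred answers higher, and that reward then guides the reinforcement learning step.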

Open source and MIT license

- The model is open source under the MIT license, meaning you can use and modify it for free.

- Provides new customization and model training opportunities for developers and researchers.

DeepSeek R1 release date

- First look: November 20, 2024 (R1-Lite preview)

- Full version: January 20, 2025

Technical specifications of DeepSeek R1

Parameters and model size

- 671 billion total parameters; because it is an MoE model, only a fraction of them (about 37 billion) is active for each token

- Large-scale data processing capability
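
To put the 671 billion figure in perspective, the short calculation below estimates how much memory the weights alone would occupy at different numeric precisions. It is back-of-the-envelope arithmetic: it ignores activations, caches, and runtime overhead, and the precision table is a general assumption rather than a statement about how DeepSeek R1 is actually stored or served.

```python
PARAMS = 671e9  # total parameter count quoted in this article

# Bytes needed to store one parameter at common precisions (assumed list).
BYTES_PER_PARAM = {"FP32": 4, "BF16/FP16": 2, "FP8/INT8": 1, "INT4": 0.5}

def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

estimates = {name: weight_memory_gb(PARAMS, b) for name, b in BYTES_PER_PARAM.items()}
for name, gb in estimates.items():
    print(f"{name}: ~{gb:,.0f} GB")
```

Even at one byte per parameter the weights come to roughly 671 GB, which is why a model of this size needs a multi-GPU setup even though only some experts run for each token.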

MoE (Mixture of Experts) architecture

- Multiple “experts” within the model

- Faster and more effective results with less computing power

Transformer architecture

- Advanced Self-Attention Mechanism

- Ability to process large amounts of information quickly

Reinforcement Learning (RLHF)

- Ability to learn from human feedback

- Provide better and more accurate answers

Salient features of DeepSeek R1

- High performance in mathematics

- Excellent support for coding

- Support for multiple languages

- Improved natural dialogue and text generation

Open source release and licensing

- Available for free under the MIT license

- Easy customization for researchers and developers

Potential areas of use for DeepSeek R1

- Education (assisting in learning math and science)

- Software development (code generation and automation)

- Business intelligence (data analysis and prediction)

- Natural language processing (NLP)

Potential limitations and challenges of DeepSeek R1

- High hardware resource requirements

- Infrastructure complexity for large models

- Potential ethical and security concerns

Future updates and developments for DeepSeek

- Better training data

- Improvements in multi-GPU and cluster setup

- Development of low-power AI models

Where can DeepSeek R1 be used?

This AI model could revolutionize several areas, including:

- Education: Helping students and teachers learn math, science, and language.

- Software development: Automated code generation and debugging.

- Business analytics: Market data analysis and future forecasting.

- Natural language processing (NLP): Powerful models for chatbots, translation, and summarization.

Potential Challenges of DeepSeek R1

Every AI model has its challenges, including:

- High computing power requirement: The MoE architecture reduces the computation needed per token, but all 671 billion parameters must still be stored, so high-end hardware is required.

- Data dependency: AI models depend on high-quality data. If the data is substandard, the answers can also be weak.

- Ethical and security concerns: Such as misinformation, bias, and privacy issues.

Future Development of DeepSeek R1

The world of AI is developing very rapidly, and DeepSeek R1 is expected to keep improving.

- Further improvements in multi-GPU and cluster setup

- Development of lightweight models with low power consumption

- Further improvements to reinforcement learning

- More customization for better NLP and coding

Conclusion

- DeepSeek R1 is a major step forward in the world of artificial intelligence, especially because of its open-source release and MoE architecture.

- If you are interested in AI, this model is well worth a look, especially if you work on mathematics, coding, or NLP.

- This model can open a new door for AI research and development, and more updates are expected in the future.

FAQs

1. What is DeepSeek R1?

It is an advanced open-source AI model that excels in math, coding, and natural language processing (NLP).

2. When was DeepSeek R1 released?

It launched as a preview version on November 20, 2024, while the full version was released on January 20, 2025.

3. Is DeepSeek R1 open source?

Yes! It is open source under the MIT license, meaning anyone can use and modify it for free.

4. How many parameters does DeepSeek R1 have?

The model has 671 billion parameters, making it one of the largest openly available AI models.

5. In which areas does DeepSeek R1 perform best?

It delivers particularly strong results in math, scientific analysis, coding, and natural language processing.

6. What types of technologies does DeepSeek R1 use?

It uses advanced technologies like Mixture of Experts (MoE), Transformer Architecture, and Reinforcement Learning (RLHF).

7. What is the biggest advantage of DeepSeek R1?

Its biggest advantages are its open-source license, relatively low computational cost, and excellent performance in math and coding.

8. Where can I download DeepSeek R1?

The model weights are published on Hugging Face under the deepseek-ai organization, and the project's GitHub page and official website link to them.
