Full Details of the DeepSeek Releases

DeepSeek is an artificial intelligence (AI) company that develops large language models and coding models. The company is based in China and releases many of its models as open source. In this article, we take a detailed look at DeepSeek's release history.

DeepSeek Founding and Background

DeepSeek was founded in 2023 by Liang Wenfeng, who is also its current CEO. The company is owned by the Chinese hedge fund High-Flyer and is headquartered in Hangzhou, China.

DeepSeek Major Releases and Development Milestones

DeepSeek launched several major AI models from 2023 to 2024, including DeepSeek LLM, DeepSeek-MoE, DeepSeek-Math, and DeepSeek-Coder.

November 2, 2023 – Release of the first model (DeepSeek-Coder)

DeepSeek released its first model, DeepSeek-Coder, which is designed for AI-based code generation.

November 29, 2023 – Release of DeepSeek-LLM models

About a month later, DeepSeek introduced a general-purpose large language model called DeepSeek-LLM, which is used for generative AI and natural language processing (NLP).

January 9, 2024 – Release of DeepSeek-MoE models

DeepSeek-MoE (Mixture of Experts) models were released in two versions:

- DeepSeek-MoE Base

- DeepSeek-MoE Chat

These models were designed for more efficient and faster performance. They use a Mixture-of-Experts architecture, in which only a few specialized sub-networks (experts) are active for each input.

April 2024 – Launch of DeepSeek-Math models

DeepSeek-Math was released in three different versions:

- DeepSeek-Math Base

- DeepSeek-Math Instruct

- DeepSeek-Math RL

These models provide AI support for solving mathematical problems and are specifically designed for STEM fields.

May 2024 – DeepSeek-V2 Release

DeepSeek launched DeepSeek-V2 to further improve its models, providing better knowledge integration, faster response times, and more efficient NLP.

June 2024 – DeepSeek-Coder V2 Launch

This update further improved DeepSeek’s coding models and introduced new AI-powered coding tools.

September 2024 – DeepSeek-V2.5 Update

The company released an update called DeepSeek-V2.5, which included:

- More data training

- Improved transformer architecture

- Improved computational performance

November 20, 2024 – Launch of new models

The company released new versions of its MoE and LLM models, which included features such as improved scaling, multi-task learning, and reduced memory usage.

December 2024 – More updates and improvements

DeepSeek-V2.5 was further improved, and the capabilities of its MoE (Mixture of Experts) models were enhanced.

DeepSeek Features and Unique Aspects

- Open Source AI Models

- Improved NLP and Coding Models

- Mixture-of-Experts (MoE) Architecture

- Specialized Models for Solving Mathematical Problems

- Improved Scalability and Multi-GPU Support

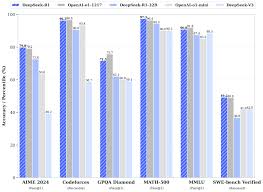

DeepSeek vs ChatGPT (GPT-4 & GPT-3.5)

Strengths of DeepSeek:

- Open source – not a commercial closed model like GPT-4

- MoE architecture – more efficient and faster

- Better at math and coding

Strengths of ChatGPT:

- More training data and larger models (GPT-3 alone has 175B parameters)

- More natural conversation (Conversational AI)

- Better integration of videos, images and code

If you want open source, low memory usage, and the speed of MoE, DeepSeek is a great choice. But if you want broader data coverage, more natural conversation, and multimodality, ChatGPT is the better option.

DeepSeek vs Gemini (Google)

Strengths of DeepSeek:

- Faster due to MoE model

- Open source – suitable for free research and development

- Better training for NLP and coding

Strengths of Gemini:

- Multimodal (videos, images, text)

- Better search and reasoning

- Google’s infrastructure – more powerful

DeepSeek is more lightweight and open source, while Gemini is more powerful and better for vision and video. DeepSeek is better if you need research, coding, or math, but Gemini is better if you need multimodal integration.

DeepSeek vs LLaMA (Meta)

Strengths of DeepSeek:

- MoE architecture – faster and more efficient

- Better mathematical modeling

- Better in-context learning

Strengths of LLaMA:

- Low-memory fine-tuning (LoRA, QLoRA)

- One of the most widely used open source chat model families

- Broader developer and community support

DeepSeek is better at math, coding, and speed, while LLaMA is better for lightweight NLP-based chat models.

What is MoE?

Mixture of Experts (MoE) is a neural network architecture in which multiple “expert” sub-networks work together. In a typical (dense) Transformer model, all parameters are used for every input token. An MoE model instead activates only a few selected experts per token. This cuts computation and energy use per token and allows higher performance for the same compute budget.

How MoE works

- The MoE model has a “router” (gating) network that activates only a few modules (experts) based on the input data.

- In this way, the model runs only the experts it needs for each input. This reduces GPU/TPU computation and makes the model faster.

- MoE makes even a large model feel lightweight because it does not activate the entire network every time.
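The following minimal pure-Python sketch makes the routing idea concrete. It illustrates generic top-k MoE routing and is not DeepSeek's implementation. Several parts are hypothetical:

- the linear router;
- the toy `make_expert` experts, which simply scale their input;
- the choice of `k=2`.

DeepSeek-MoE itself adds refinements that are not shown here, such as fine-grained experts and shared experts.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical toy expert that just scales its input vector.

    Real experts are feed-forward sub-networks; scaling keeps the example readable.
    """
    return lambda x: [scale * v for v in x]


def moe_forward(x, router_weights, experts, k=2):
    """Send input vector x to its top-k experts and mix their outputs.

    router_weights holds one weight vector per expert (a hypothetical linear router).
    Returns the mixed output, the indices of the chosen experts, and their gate weights.
    """
    # 1. The router scores every expert with a dot product against x.
    logits = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    # 2. Keep only the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # 3. Softmax over the chosen experts' scores gives gate weights that sum to 1.
    gates = softmax([logits[i] for i in chosen])
    # 4. Output = gate-weighted sum of the chosen experts' outputs.
    out = [0.0] * len(x)
    for g, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, chosen, gates


if __name__ == "__main__":
    experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
    router = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
    out, chosen, gates = moe_forward([0.5, 2.0], router, experts, k=2)
    print("experts used:", chosen)  # only 2 of the 4 experts run
    print("output:", out)
```

With this example router, the expert scores are 0.5, 2.0, 2.5 and -2.5, so only experts 2 and 1 are evaluated. Experts 0 and 3 cost nothing for this input, and that skipped work is where MoE saves compute.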

Features of MoE

- Higher efficiency – MoE models use less computation per token.

- Higher scalability – MoE models like DeepSeek can grow to very large total sizes without a proportional increase in per-token compute.

- Better learning – Different “expert” sub-networks can specialize in different types of problems.

- Lower cost – Since the entire model does not need to be activated, power and computation are saved.
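The efficiency and scalability points come down to simple arithmetic. Total parameters grow with the number of experts, but per-token compute grows only with the number of activated experts. The sketch below counts both for a single MoE layer. The layer sizes in `MoELayerConfig` are hypothetical round numbers, not DeepSeek's configuration. The count also ignores router weights, biases, attention, and embeddings.

```python
from dataclasses import dataclass


@dataclass
class MoELayerConfig:
    """Hypothetical MoE layer sizes, chosen for illustration (not DeepSeek's real config)."""
    d_model: int      # hidden size of each token vector
    d_expert: int     # inner width of each expert's feed-forward network
    num_experts: int  # experts available in the layer
    top_k: int        # experts the router activates per token


def expert_params(cfg: MoELayerConfig) -> int:
    # Each expert maps d_model -> d_expert -> d_model: two weight matrices (biases ignored).
    return 2 * cfg.d_model * cfg.d_expert


def moe_layer_params(cfg: MoELayerConfig) -> tuple[int, int]:
    """Return (total, active-per-token) expert parameters; router weights are ignored."""
    per_expert = expert_params(cfg)
    return cfg.num_experts * per_expert, cfg.top_k * per_expert


if __name__ == "__main__":
    cfg = MoELayerConfig(d_model=1024, d_expert=2048, num_experts=64, top_k=4)
    total, active = moe_layer_params(cfg)
    print(f"total expert params: {total:,}")
    print(f"active per token:    {active:,} ({active / total:.2%})")
```

With 64 experts and 4 active per token, this layer holds about 268 million expert parameters, but each token touches only about 16.8 million (6.25%). Adding more experts raises total capacity while leaving that per-token cost unchanged.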

Transformer Architecture – The Basics of DeepSeek

The core architecture of DeepSeek is based on the Transformer neural network. Transformer models rely on the attention mechanism. This makes them more efficient than earlier NLP models such as RNNs and LSTMs, because they process all positions of a sequence in parallel instead of one step at a time.

What is a Transformer?

The Transformer model works on the Self-Attention Mechanism, which means that the model can better understand the relationship between different words in any sentence or code.

- Self-Attention Mechanism – The model examines the relationship of each word to all other words to understand the context.

- Multi-Head Attention – Runs several attention “heads” in parallel, each looking at the relationships between words from a different angle, to achieve better understanding.

- Positional Encoding – Extra information is added to each token so the model knows the order of words in a sentence.
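The self-attention and position-encoding steps can be sketched in a few lines of pure Python. This is a single-head, textbook version of scaled dot-product attention, paired with the sinusoidal encoding from the original Transformer paper. The toy embeddings and identity projection matrices are hypothetical. Real models, DeepSeek's included, use learned weights, many heads, and different position schemes such as rotary embeddings.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply matrices stored as lists of rows: (n x m) @ (m x p) -> (n x p)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention.

    x: n token vectors of size d_model; wq, wk, wv: (d_model x d_k) projections.
    Each output row is a weighted average of all value vectors, weighted by how well
    that token's query matches every token's key.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(k[0]))
    out = []
    for qi in q:
        # Compare this token's query with every key (this is where context comes from).
        scores = [sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def sinusoidal_positions(n, d_model):
    """Sinusoidal positional encoding: sin on even dimensions, cos on odd ones."""
    return [[math.sin(pos / 10000 ** (2 * (i // 2) / d_model)) if i % 2 == 0
             else math.cos(pos / 10000 ** (2 * (i // 2) / d_model))
             for i in range(d_model)] for pos in range(n)]


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d embeddings (hypothetical)
    pos = sinusoidal_positions(len(tokens), 2)
    # Add position information to each token so word order is visible to attention.
    x = [[t + p for t, p in zip(tv, pv)] for tv, pv in zip(tokens, pos)]
    identity = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical projection weights
    print(self_attention(x, identity, identity, identity))
```

Multi-head attention repeats `self_attention` with several independent sets of projection matrices and concatenates the results. This is what lets each head focus on a different kind of relationship between tokens.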

Transformer Strengths

- Better Context Awareness – Works better on longer sentences and code.

- Faster – Because it processes tokens in parallel, it trains faster than sequential models such as RNNs.

- Better language understanding – DeepSeek’s Transformer-based MoE models can better understand natural language, math, and code.

Conclusion

- DeepSeek offers open-source, fast, MoE-based AI models

- There are several options for using it, such as downloading models from Hugging Face, running quantized versions locally, or calling the API

- DeepSeek can be a great alternative to ChatGPT, Gemini, and LLaMA in various situations
